Qualitative vs Quantitative Research Methods & Data Analysis

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD, is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

The main difference between quantitative and qualitative research is the type of data they collect and analyze.

Quantitative data is information about quantities, and therefore numbers, whereas qualitative data is descriptive and concerns phenomena that can be observed but not measured, such as language.

- Quantitative research collects numerical data and analyzes it using statistical methods. The aim is to produce objective, empirical data that can be measured and expressed numerically. Quantitative research is often used to test hypotheses, identify patterns, and make predictions.

- Qualitative research gathers non-numerical data (words, images, sounds) to explore subjective experiences and attitudes, often via observation and interviews. It aims to produce detailed descriptions and uncover new insights about the studied phenomenon.


What Is Qualitative Research?

Qualitative research is the process of collecting, analyzing, and interpreting non-numerical data, such as language. Qualitative research can be used to understand how an individual subjectively perceives and gives meaning to their social reality.

Qualitative data is non-numerical data, such as text, video, photographs, or audio recordings. This type of data can be collected using diary accounts or in-depth interviews and analyzed using grounded theory or thematic analysis.

Qualitative research is multimethod in focus, involving an interpretive, naturalistic approach to its subject matter. This means that qualitative researchers study things in their natural settings, attempting to make sense of, or interpret, phenomena in terms of the meanings people bring to them. Denzin and Lincoln (1994, p. 2)

Interest in qualitative data came about as the result of the dissatisfaction of some psychologists (e.g., Carl Rogers) with the scientific approach of psychologists such as the behaviorists (e.g., Skinner).

Because psychologists study people, the traditional approach to science is not seen as an appropriate way of carrying out research, as it fails to capture the totality of human experience and the essence of being human. Exploring participants’ experiences is known as a phenomenological approach (see humanism).

Qualitative research is primarily concerned with meaning, subjectivity, and lived experience. The goal is to understand the quality and texture of people’s experiences, how they make sense of them, and the implications for their lives.

Qualitative research aims to understand the social reality of individuals, groups, and cultures as nearly as possible as participants feel or live it. Thus, people and groups are studied in their natural setting.

Examples of qualitative research questions include what an experience feels like, how people talk about something, how they make sense of an experience, and how events unfold for people.

Research following a qualitative approach is exploratory and seeks to explain ‘how’ and ‘why’ a particular phenomenon, or behavior, operates as it does in a particular context. It can be used to generate hypotheses and theories from the data.

Qualitative Methods

There are different types of qualitative research methods, including diary accounts, in-depth interviews, documents, focus groups, case study research, and ethnography.

The results of qualitative methods provide a deep understanding of how people perceive their social realities and in consequence, how they act within the social world.

The researcher has several methods for collecting empirical materials, ranging from the interview to direct observation, to the analysis of artifacts, documents, and cultural records, to the use of visual materials or personal experience. Denzin and Lincoln (1994, p. 14)

Here are some examples of qualitative data:

Interview transcripts: Verbatim records of what participants said during an interview or focus group. They allow researchers to identify common themes and patterns and to draw conclusions based on the data. Interview transcripts can also provide direct quotes and examples to support research findings.

Observations: The researcher typically takes detailed notes on what they observe, including any contextual information, nonverbal cues, or other relevant details. The resulting observational data can be analyzed to gain insights into social phenomena, such as human behavior, social interactions, and cultural practices.

Unstructured interviews: These generate qualitative data through the use of open questions, allowing the respondent to talk in some depth, choosing their own words. This helps the researcher develop a real sense of a person’s understanding of a situation.

Diaries or journals: Written accounts of personal experiences or reflections.

Notice that qualitative data could be much more than just words or text. Photographs, videos, sound recordings, and so on, can be considered qualitative data. Visual data can be used to understand behaviors, environments, and social interactions.

Qualitative Data Analysis

Qualitative research is endlessly creative and interpretive. The researcher does not just leave the field with mountains of empirical data and then easily write up his or her findings.

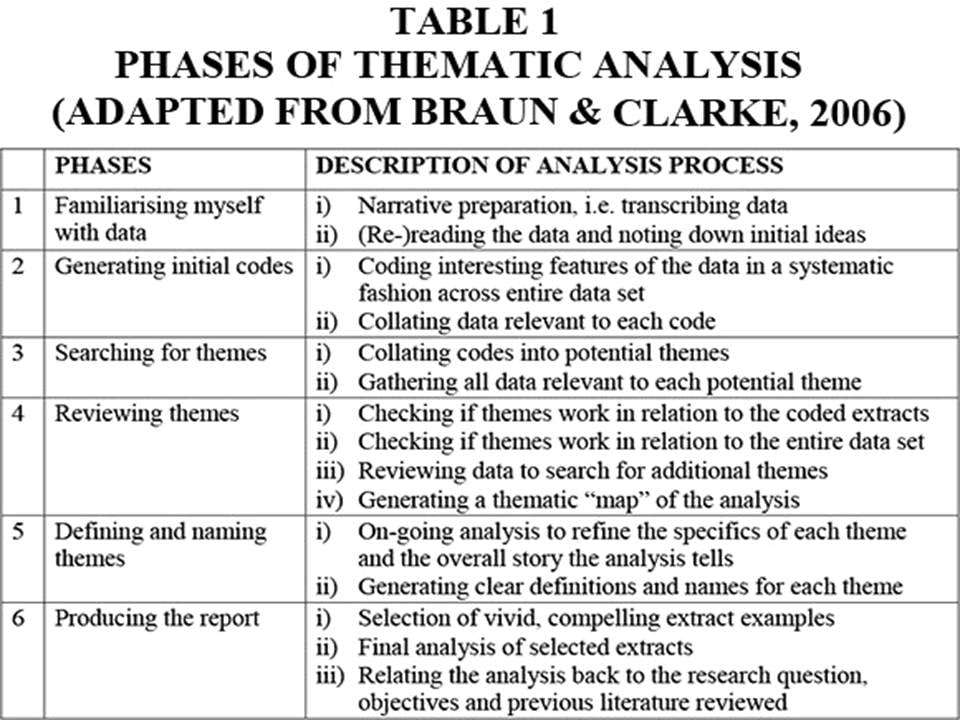

Qualitative interpretations are constructed, and various techniques can be used to make sense of the data, such as content analysis, grounded theory (Glaser & Strauss, 1967), thematic analysis (Braun & Clarke, 2006), or discourse analysis.

For example, thematic analysis is a qualitative approach that involves identifying implicit or explicit ideas within the data. Themes will often emerge once the data has been coded.
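Thematic analysis is interpretive, and no script can replace the researcher's judgment, but the bookkeeping side of coding can be illustrated. The minimal sketch below assumes hypothetical interview excerpts that a researcher has already tagged with codes, plus a researcher-defined grouping of codes into candidate themes; it simply collates the excerpts under each theme so they can be reviewed together. All excerpts, code labels, theme names, and the `collate_by_theme` helper are invented for illustration.

```python
from collections import defaultdict

# Hypothetical excerpts, each already tagged by the researcher with one or more codes.
coded_excerpts = [
    ("I never know who to ask for help at work.", {"isolation", "uncertainty"}),
    ("My sister calls every evening, and that keeps me going.", {"family support"}),
    ("Some days I just don't leave the house.", {"isolation", "withdrawal"}),
    ("The forms kept changing, so I gave up.", {"uncertainty"}),
]

# Hypothetical researcher-defined grouping of codes into candidate themes.
themes = {
    "Feeling cut off": {"isolation", "withdrawal"},
    "Navigating uncertainty": {"uncertainty"},
    "Sources of support": {"family support"},
}

def collate_by_theme(excerpts, theme_map):
    """List each excerpt under every candidate theme that shares at least one of its codes."""
    collated = defaultdict(list)
    for text, codes in excerpts:
        for theme, theme_codes in theme_map.items():
            if codes & theme_codes:  # the excerpt carries a code belonging to this theme
                collated[theme].append(text)
    return dict(collated)

for theme, quotes in collate_by_theme(coded_excerpts, themes).items():
    print(f"{theme} ({len(quotes)} excerpts)")
    for quote in quotes:
        print("  -", quote)
```

The excerpt counts are only a navigation aid: in thematic analysis, a theme's importance is not simply a matter of how often its codes occur.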

Key Features

- Events can be understood adequately only if they are seen in context. Therefore, a qualitative researcher immerses her/himself in the field, in natural surroundings. The contexts of inquiry are not contrived; they are natural. Nothing is predefined or taken for granted.

- Qualitative researchers want those who are studied to speak for themselves, to provide their perspectives in words and other actions. Therefore, qualitative research is an interactive process in which the persons studied teach the researcher about their lives.

- The qualitative researcher is an integral part of the data; without the active participation of the researcher, no data exists.

- The study’s design evolves during the research and can be adjusted or changed as it progresses. For the qualitative researcher, there is no single reality. It is subjective and exists only in reference to the observer.

- The theory is data-driven and emerges as part of the research process, evolving from the data as they are collected.

Limitations of Qualitative Research

- Because of the time and costs involved, qualitative designs do not generally draw samples from large-scale data sets.

- The problem of adequate validity or reliability is a major criticism. Because of the subjective nature of qualitative data and its origin in single contexts, it is difficult to apply conventional standards of reliability and validity. For example, because of the central role played by the researcher in the generation of data, it is not possible to replicate qualitative studies.

- Also, contexts, situations, events, conditions, and interactions cannot be replicated to any extent, nor can findings be generalized with confidence beyond the context studied.

- The time required for data collection, analysis, and interpretation is lengthy. Analysis of qualitative data is difficult, and expert knowledge of an area is necessary to interpret it. Great care must be taken when doing so, for example, when looking for symptoms of mental illness.

Advantages of Qualitative Research

- Because of close researcher involvement, the researcher gains an insider’s view of the field. This allows the researcher to find issues (such as subtleties and complexities) that are often missed by scientific, more positivistic inquiries.

- Qualitative descriptions can be important in suggesting possible relationships, causes, effects, and dynamic processes.

- Qualitative analysis allows for ambiguities/contradictions in the data, which reflect social reality (Denscombe, 2010).

- Qualitative research uses a descriptive, narrative style; this research might be of particular benefit to the practitioner as she or he could turn to qualitative reports to examine forms of knowledge that might otherwise be unavailable, thereby gaining new insight.

What Is Quantitative Research?

Quantitative research involves the process of objectively collecting and analyzing numerical data to describe, predict, or control variables of interest.

The goals of quantitative research are to test causal relationships between variables, make predictions, and generalize results to wider populations.

Quantitative researchers aim to establish general laws of behavior and phenomena across different settings/contexts. Research is used to test a theory and ultimately support or reject it.

Quantitative Methods

Experiments typically yield quantitative data, as they are concerned with measuring things. However, other research methods, such as controlled observations and questionnaires, can produce both quantitative and qualitative information.

For example, a rating scale or closed questions on a questionnaire would generate quantitative data as these produce either numerical data or data that can be put into categories (e.g., “yes,” “no” answers).
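The sketch below, using invented responses, shows how closed questions and rating scales become quantitative data: yes/no answers turn into category counts and proportions, and ratings on an assumed 1 to 5 scale turn into numbers that can be averaged. The question wording, the response values, and the 1 to 5 scale are hypothetical.

```python
from collections import Counter
from statistics import mean

# Hypothetical answers to the closed question "Do you exercise weekly?"
closed_answers = ["yes", "no", "yes", "yes", "no", "yes"]

# Hypothetical ratings on an assumed 1-5 scale (1 = very dissatisfied, 5 = very satisfied).
ratings = [4, 5, 3, 4, 2, 4]

# Reject values outside the assumed scale before treating them as numbers.
if not all(1 <= r <= 5 for r in ratings):
    raise ValueError("ratings must lie on the 1-5 scale")

counts = Counter(closed_answers)                      # categories -> frequencies
proportion_yes = counts["yes"] / len(closed_answers)  # frequency -> proportion

print(counts)                     # Counter({'yes': 4, 'no': 2})
print(round(proportion_yes, 2))   # 0.67
print(round(mean(ratings), 2))    # 3.67
```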

Experimental methods limit how research participants react to and express appropriate social behavior.

Findings are, therefore, likely to be context-bound and simply a reflection of the assumptions that the researcher brings to the investigation.

There are numerous examples of quantitative data in psychological research, including mental health. Here are a few examples:

One example is the Experiences in Close Relationships Scale (ECR), a self-report questionnaire widely used to assess adult attachment styles.

The ECR provides quantitative data that can be used to assess attachment styles and predict relationship outcomes.

Neuroimaging data: Neuroimaging techniques, such as MRI and fMRI, provide quantitative data on brain structure and function.

This data can be analyzed to identify brain regions involved in specific mental processes or disorders.

Another example is the Beck Depression Inventory (BDI), a self-report questionnaire widely used to assess the severity of depressive symptoms in individuals.

The BDI consists of 21 questions, each scored on a scale of 0 to 3, with higher scores indicating more severe depressive symptoms.
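Because the total is the sum of the item scores, scoring can be sketched in a few lines. The minimal example below assumes item responses are already coded as integers from 0 to 3; the `bdi_total` function and the respondent's answers are hypothetical. It only validates and sums the items (possible range 0 to 63) and is an arithmetic illustration, not a clinical scoring tool; severity cut-offs are left out because they depend on the BDI version in use.

```python
N_ITEMS = 21               # the BDI has 21 items
ITEM_MIN, ITEM_MAX = 0, 3  # each item is scored from 0 to 3

def bdi_total(item_scores):
    """Validate 21 item scores and return their sum (possible range 0 to 63)."""
    if len(item_scores) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} item scores, got {len(item_scores)}")
    for item, score in enumerate(item_scores, start=1):
        if not ITEM_MIN <= score <= ITEM_MAX:
            raise ValueError(f"item {item} has score {score}, outside {ITEM_MIN}-{ITEM_MAX}")
    return sum(item_scores)

# Hypothetical responses from one respondent.
example = [1, 0, 2, 1, 0, 1, 1, 0, 0, 2, 1, 1, 0, 1, 2, 1, 0, 1, 1, 0, 1]
print(bdi_total(example))  # 17
```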

Quantitative Data Analysis

Statistics help us turn quantitative data into useful information to help with decision-making. We can use statistics to summarize our data, describing patterns, relationships, and connections. Statistics can be descriptive or inferential.

Descriptive statistics help us to summarize our data. In contrast, inferential statistics are used to identify statistically significant differences between groups of data (such as intervention and control groups in a randomized controlled trial).
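To make the distinction concrete, the sketch below summarizes two invented groups of outcome scores with descriptive statistics and then computes Welch's t statistic and its degrees of freedom, one common inferential test for a difference between two independent group means. The data and the helper names are hypothetical, and converting t into a p-value (which requires the t distribution) is omitted so the sketch runs with the standard library alone.

```python
import math
import statistics

def describe(scores):
    """Descriptive statistics: summarize a single group of scores."""
    return {
        "n": len(scores),
        "mean": statistics.mean(scores),
        "median": statistics.median(scores),
        "sd": statistics.stdev(scores),  # sample SD (n - 1 in the denominator)
    }

def welch_t(a, b):
    """Inferential statistics: Welch's t statistic and degrees of freedom
    for the difference between two independent group means."""
    va = statistics.variance(a) / len(a)  # squared standard error of mean a
    vb = statistics.variance(b) / len(b)  # squared standard error of mean b
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical symptom scores at the end of a study (lower = fewer symptoms).
intervention = [12, 9, 11, 8, 10, 7]
control = [15, 14, 16, 13, 17, 15]

print(describe(intervention))
print(describe(control))
t, df = welch_t(intervention, control)
print(f"Welch t = {t:.2f}, df = {df:.1f}")
```

With SciPy installed, `scipy.stats.ttest_ind(intervention, control, equal_var=False)` runs the same Welch test and also reports a p-value.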

Key Features

- Quantitative researchers try to control extraneous variables by conducting their studies in the lab.

- The researcher aims for objectivity (i.e., without bias) and is separate from the data.

- The design of the study is determined before it begins.

- For the quantitative researcher, reality is objective, exists separately from the researcher, and can be seen by anyone.

- Research is used to test a theory and ultimately support or reject it.

Limitations of Quantitative Research

- Context: Quantitative experiments do not take place in natural settings. In addition, they do not allow participants to explain their choices or what the questions mean to them (Carr, 1994).

- Researcher expertise: Poor knowledge of the application of statistical analysis may negatively affect analysis and subsequent interpretation (Black, 1999).

- Variability of data quantity: Large sample sizes are needed for more accurate analysis. Small-scale quantitative studies may be less reliable because of the low quantity of data (Denscombe, 2010). This also affects the ability to generalize study findings to wider populations.

- Confirmation bias: The researcher might miss observing phenomena because of a focus on theory or hypothesis testing rather than on hypothesis generation.
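The point about sample size can be made concrete with the standard error of the mean, SE = SD / √n, which shrinks as the sample grows: a mean based on more observations varies less from sample to sample. The sketch below assumes a hypothetical standard deviation of 15 points.

```python
import math

def standard_error(sd, n):
    """Standard error of the mean: expected spread of sample means across repeated samples."""
    return sd / math.sqrt(n)

SD = 15  # hypothetical standard deviation of a test score
for n in (10, 100, 1000):
    print(f"n = {n:>4}: SE = {standard_error(SD, n):.2f}")
# n =   10: SE = 4.74
# n =  100: SE = 1.50
# n = 1000: SE = 0.47
```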

Advantages of Quantitative Research

- Scientific objectivity: Quantitative data can be interpreted with statistical analysis, and since statistics are based on the principles of mathematics, the quantitative approach is viewed as scientifically objective and rational (Carr, 1994; Denscombe, 2010).

- Useful for testing and validating already constructed theories.

- Rapid analysis: Sophisticated software removes much of the need for prolonged data analysis, especially with large volumes of data involved (Antonius, 2003).

- Replication: Quantitative data is based on measured values and can be checked by others because numerical data is less open to ambiguities of interpretation.

- Hypotheses can also be tested because of statistical analysis (Antonius, 2003).

References

Antonius, R. (2003). Interpreting quantitative data with SPSS. Sage.

Black, T. R. (1999). Doing quantitative research in the social sciences: An integrated approach to research design, measurement and statistics. Sage.

Braun, V., & Clarke, V. (2006). Using thematic analysis in psychology. Qualitative Research in Psychology, 3, 77–101.

Carr, L. T. (1994). The strengths and weaknesses of quantitative and qualitative research: What method for nursing? Journal of Advanced Nursing, 20(4), 716–721.

Denscombe, M. (2010). The good research guide: For small-scale social research. McGraw Hill.

Denzin, N., & Lincoln, Y. (1994). Handbook of qualitative research. Sage.

Glaser, B. G., Strauss, A. L., & Strutzel, E. (1968). The discovery of grounded theory: Strategies for qualitative research. Nursing Research, 17(4), 364.

Minichiello, V. (1990). In-depth interviewing: Researching people. Longman Cheshire.

Punch, K. (1998). Introduction to social research: Quantitative and qualitative approaches. Sage.

Further Information

- Mixed methods research

- Designing qualitative research

- Methods of data collection and analysis

- Introduction to quantitative and qualitative research

- Checklists for improving rigour in qualitative research: a case of the tail wagging the dog?

- Qualitative research in health care: Analysing qualitative data

- Qualitative data analysis: the framework approach

- Using the framework method for the analysis of qualitative data in multi-disciplinary health research

- Content Analysis

- Grounded Theory

- Thematic Analysis


Back to the Future of Quantitative Psychology and Measurement: Psychometrics in the Twenty-First Century

Pietro Cipresso, Jason C. Immekus


Edited and reviewed by: Axel Cleeremans, Free University of Brussels, Belgium

*Correspondence: Pietro Cipresso

This article was submitted to Quantitative Psychology and Measurement, a section of the journal Frontiers in Psychology

Received 2017 Aug 15; Accepted 2017 Nov 17; Collection date 2017.

Keywords: quantitative psychology, measurement, psychometrics, computational psychometrics, mathematical psychology

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

Measurement in psychology has always been a significant challenge. Research in quantitative psychology has developed several methods and techniques to improve our understanding of humans. Over the last few decades, the rapid advancement of technology has led to more extensive study of human cognition, including both its emotional and behavioral aspects. Psychometric methods have integrated very advanced mathematical and statistical techniques into their analyses, and in our Frontiers Specialty (Quantitative Psychology and Measurement), we have stressed the methodological dimension of best practice in psychology. The long tradition of using self-report questionnaires remains of great interest, but it is not enough in the twenty-first century.

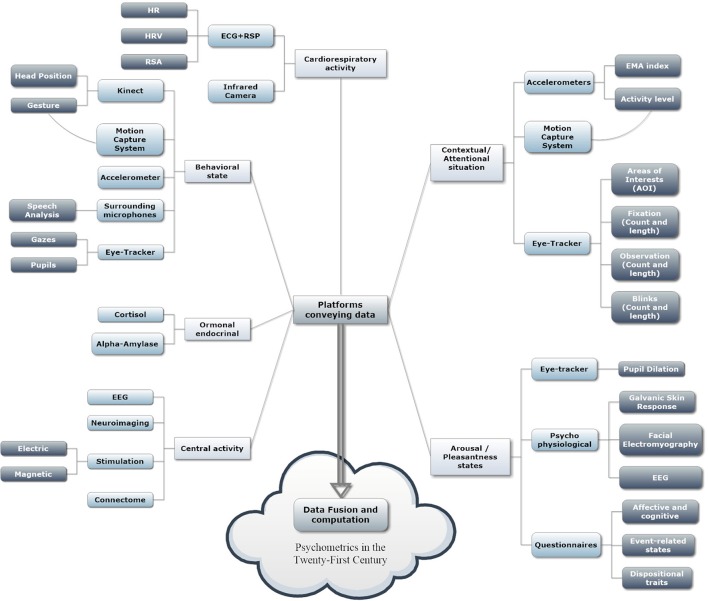

We stress the use of innovative methods and technologies as psychometric tools. One of the most significant challenges in quantitative psychology and measurement concerns the integration of technologies and computational techniques into current standards.

In the following, our aim is to show how data collection can involve human behavior, internal states, and the manipulation of experimental settings. In particular, we define typical psychophysiological measures for a deeper understanding of internal states, analyzing the central and peripheral nervous system, hormonal factors in the endocrine system, and the fascinating field of gene transcription in human neuroscience. These factors represent the measurement of the “internal” sphere, which is attracting growing interest across all fields of psychology, including social and affective science, not only the cognitive sciences. The idea of reading internal states has long been clear in clinical and experimental psychology, but it is now becoming even more widespread, thanks to improved technologies and lower costs.

Next, we highlight the measurement of exhibited behavior patterns, representing the “external” sphere of human thinking through expressed behavior. Again, technology is a critical aspect, shedding new light on the field. Low-cost and high-end technologies for understanding verbal and nonverbal patterns are helping to identify innovative ways to measure the psychological factors that lead to a behavior. They can be considered a new challenge for behavioral science, e.g., the use of commercial devices (such as the Kinect) in motor and cognitive neurorehabilitation. Linked to psychophysiology and exhibited behavior patterns, virtual reality is becoming a cutting-edge tool for experimental manipulation, allowing researchers to build personalized experimental settings that nonetheless remain within a laboratory.

We define and highlight the use of virtual reality in psychology as a remarkably low-cost tool for collecting data and creating realistic situations that can be used in clinical, experimental, and social settings, among others, which makes it of keen interest in several fields of psychology.

In conclusion, we present new methods and techniques that are already used in other fields and are now rapidly expanding in psychology and psychometrics as well. Computational science, complex networks, and simulations are highlighted as promising new methods for the best convergence of psychological science and technology. These have the ability to create innovative tools for better comprehension and quantitative measurement in psychology.

Psychophysiology: nervous system, endocrine system, and gene transcription

The use of biosensors in human research has become a reliable method for the quantitative and objective measurement of participants at the psychological, behavioral, and physiological levels. Biosensors, and psychophysiology specifically, are not an alternative to self-reports, but they can be considered a great asset in our effort to integrate additional information and enhance our understanding of specific patterns.

The advantage of psychophysiology is the possibility of recording internal states during an experience (Mauri et al., 2010; Blascovich, 2014; Kreibig et al., 2015; McGaugh, 2016). This means that the researcher can evaluate the impact of a specific experience without interrupting the experience to ask the user her/his opinion.

In the valence-arousal model (Russell, 1979; Lang, 1995), researchers are interested in identifying the affective states of subjects during experimental sessions. Two dimensions are mainly investigated, namely physiological arousal and emotional valence, and the arousal-pleasantness plane is drawn in psychophysiology as a robust measurement of affective states. Physiological arousal is a measure of the sympathetic branch of the autonomic nervous system (ANS). Sympathetic activation increases the activity of the sudoriparous glands (also known as sweat glands), which can be measured through electrodermal activity (EDA), recorded as galvanic skin response (GSR) or as skin conductance response (SCR). EDA is a direct measure of sympathetic activation with no intervention of the parasympathetic branch. Other good measures of physiological arousal, which are affected by both branches of the ANS, are skin temperature, heart rate, respiration rate, and pupil dilation.

Measuring emotional valence is more complex, and researchers generally use facial expressions to identify this dimension. At the psychophysiological level, facial expressions have been tested through surface electromyography (sEMG), a noninvasive way to quantify muscle activation. In particular, activations of the zygomatic major and corrugator supercilii facial muscles are known as the best indicators of emotional valence: increased activity of the zygomatic major muscle indicates positive valence, and higher activation of the corrugator supercilii muscle indicates negative valence (Blumenthal et al., 2005). Since emotional valence identifies a positive or negative direction, we might also be interested in a more direct measure of emotional intensity, and the best index for this purpose is pupil dilation (Mauri et al., 2010).
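The direction logic described above can be sketched as two simple indexes computed against a resting baseline: arousal as the rise in skin conductance, and valence as the change in zygomatic activity minus the change in corrugator activity. This is a hypothetical simplification for illustration only; the function names and data are invented, and it assumes the EMG values are already rectified amplitudes. Published pipelines also filter, normalize, and standardize these signals before interpreting them.

```python
from statistics import mean

def change_from_baseline(baseline, task):
    """Mean signal level during the task minus mean level during a resting baseline."""
    return mean(task) - mean(baseline)

def affect_indexes(eda_base, eda_task, zyg_base, zyg_task, corr_base, corr_task):
    """Hypothetical, simplified arousal and valence indexes.

    arousal: rise in skin conductance (EDA) over baseline, in microsiemens.
    valence: zygomatic major change minus corrugator supercilii change (EMG, microvolts);
             positive values point toward positive valence, negative toward negative valence.
    """
    arousal = change_from_baseline(eda_base, eda_task)
    valence = (change_from_baseline(zyg_base, zyg_task)
               - change_from_baseline(corr_base, corr_task))
    return arousal, valence

# Invented recordings: EDA in microsiemens, rectified EMG amplitudes in microvolts.
arousal, valence = affect_indexes(
    eda_base=[2.0, 2.1, 1.9], eda_task=[2.6, 2.8, 2.7],
    zyg_base=[3.0, 3.2, 2.8], zyg_task=[6.0, 6.5, 5.5],
    corr_base=[4.0, 4.1, 3.9], corr_task=[4.0, 3.8, 4.2],
)
print(f"arousal change: {arousal:+.2f} uS, valence index: {valence:+.2f} uV")
```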

Respiratory activity can be recorded to identify both voluntary and autonomic respiration and their effects on cardiovascular activity, which can also be recorded. In particular, respiration (RSP) can be recorded through respiratory inductance plethysmography (RIP) with thoracic and abdominal bands. Cardiac activity, in turn, can be recorded through an electrocardiogram (ECG), in particular by identifying the R peak in the ECG waveform (among the conventional PQRSTU peaks). Fluctuations in the intervals between successive R peaks (RR intervals, also known as NN intervals to emphasize normal beats) provide information on sympathovagal activation and can be used to compute heart rate variability (HRV) indexes in the time and frequency domains. According to the guidelines of the Task Force of the European Society of Cardiology and the North American Society of Pacing and Electrophysiology, time-domain, spectral, and nonlinear HRV indexes can be considered a robust way to identify responses of the ANS (Malik, 1996).
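As an illustration of the time-domain indexes, the sketch below computes mean heart rate, SDNN (standard deviation of NN intervals), RMSSD (root mean square of successive differences), and pNN50 (percentage of successive differences larger than 50 ms) from a list of NN intervals. The interval values and the `hrv_time_domain` name are invented, and the sketch assumes R peaks have already been detected and ectopic beats removed; spectral and nonlinear indexes are not covered.

```python
import math
from statistics import mean, stdev

def hrv_time_domain(nn_ms):
    """Common time-domain HRV indexes from a list of NN (normal-to-normal) intervals in ms."""
    if len(nn_ms) < 3:
        raise ValueError("need at least three NN intervals")
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]  # successive differences
    mean_nn = mean(nn_ms)
    return {
        "mean_nn_ms": mean_nn,
        "mean_hr_bpm": 60_000 / mean_nn,                      # 60,000 ms in one minute
        "sdnn_ms": stdev(nn_ms),                              # overall variability
        "rmssd_ms": math.sqrt(mean([d * d for d in diffs])),  # beat-to-beat variability
        "pnn50_pct": 100 * sum(abs(d) > 50 for d in diffs) / len(diffs),
    }

# Invented NN intervals (ms) from a short recording.
rr = [800, 810, 790, 850, 780, 820, 800, 760]
for name, value in hrv_time_domain(rr).items():
    print(f"{name}: {value:.2f}")
```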

Electroencephalographic (EEG) analyses of both the classic spectral bands (e.g., alpha, beta, delta, gamma, …) and event-related potentials (ERPs) have also been used extensively in psychophysiological research (Thompson, 2016). More generally, brain-related data collection is one of the largest fields in neuroscience and psychology. In particular, neuroimaging techniques have received a lot of attention in psychological science and are able to provide a wide spectrum of information about human thinking, including its cognitive, affective, and relational aspects (Cipresso et al., 2012b). Moreover, improved methods for automating the analysis of brain imaging results have provided new access to important information about brain structure and its connections. This has been made possible by deep learning and other computational techniques, but also by integrating different kinds of methods, such as recording EEG in the scanner to achieve both spatial and temporal precision, and by the interesting developments in PET and fMRI, including spectroscopy, diffusion imaging, connectomics, and the other challenges that neuroimaging and neuroscience methods are able to address.
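Spectral band analysis can be sketched in a few lines: compute the signal's power spectrum, then sum the power falling within each frequency band. The example below uses a plain discrete Fourier transform on a synthetic signal (a strong 10 Hz "alpha" oscillation plus a weaker 20 Hz "beta" component) so that it runs with the standard library alone. The band limits, including theta, are common conventions but are an assumption here, and the function name is hypothetical; real EEG pipelines add filtering, artifact rejection, and windowed estimators such as Welch's method.

```python
import math

# Assumed band limits in Hz; exact boundaries differ between laboratories.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13), "beta": (13, 30), "gamma": (30, 45)}

def relative_band_power(signal, fs):
    """Share of total 1-45 Hz power falling in each band, from a plain DFT periodogram."""
    n = len(signal)
    offset = sum(signal) / n
    centered = [x - offset for x in signal]  # remove the DC offset
    power = dict.fromkeys(BANDS, 0.0)
    for k in range(1, n // 2 + 1):           # positive-frequency DFT bins
        freq = k * fs / n
        band = next((name for name, (lo, hi) in BANDS.items() if lo <= freq < hi), None)
        if band is None:
            continue                          # outside the bands of interest
        re = sum(x * math.cos(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * t / n) for t, x in enumerate(centered))
        power[band] += re * re + im * im
    total = sum(power.values())
    if total == 0:
        raise ValueError("no power in the 1-45 Hz range")
    return {name: p / total for name, p in power.items()}

# Synthetic 2-second signal sampled at 128 Hz: strong 10 Hz alpha plus weak 20 Hz beta.
fs = 128
signal = [10 * math.sin(2 * math.pi * 10 * t / fs) + 2 * math.sin(2 * math.pi * 20 * t / fs)
          for t in range(2 * fs)]
for name, share in relative_band_power(signal, fs).items():
    print(f"{name:>5}: {share:.3f}")
```

Because power scales with amplitude squared, the alpha band here should hold roughly 100/104 of the total.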

By using biosensors to record peripheral and central nervous activity, we can obtain a very large amount of data that can be analyzed for a deeper understanding of internal states during experimental tasks in psychological studies. However, there are also several problems with the use of biosensors; in particular, their obtrusiveness needs to be considered. Even if the goal is not to interfere with the experiment, interference cannot be entirely avoided, and one way or another we end up affecting behavior by measuring it. In most behavioral experiments (e.g., research related to emotions, affective states, and cognitive assessment), conditioning the participants is an important issue that risks affecting the validity and reliability of a study.

This is a well-known problem in all fields of research, and there are different ways to deal with it. First, more precise, less intrusive biosensors have been developed. Also, there have been significant advancements in integrated biosensors designed to avoid any discomfort, such as wearable biosensors without patches or cables.

Human experiments necessarily involve technologies used to read the impact of the experience under study. However, these technologies are not transparent to participants: the more we want to know, the more sensors we need, and the more evident they become to the subject. We need to seriously consider whether contactless biosensors could collect data that remain invisible to the data generator, that is, the human being. In physics, scientists face the same problem, well known as the “observer effect,” whereby a system cannot be measured without influencing the system itself. In biobehavioral sciences, the observer effect is even worse (Lewandowski et al., 2016). In consciousness research, the observer effect can be circumvented through “no-report” paradigms, in which people are deliberately not asked to report on their experiences.

Interestingly, a foundational part of psychology and psychometrics consists not only of observing one's experiences, but also of thinking about them and reporting them. This is a key question to which we, as researchers in the field, are called to respond. Pervasive and ubiquitous computing could provide some solutions by integrating biosensors into technology so personal that it becomes invisible to individuals.

Considering smartphones and the data collected by integrated sensors (such as connected heart-rate watches), we can see that data collection can be transparent to everyone. In a big data world, it is probably easier to infer information from existing devices than to collect new data. On the other hand, this requires a change in knowledge and methods, i.e., a shift from laboratory, experiment-driven designs to field, data-driven analyses. Even if this scenario is possible, it will not solve every measurement problem, but it remains a useful complement and a worthwhile direction toward a better understanding.

Psychophysiology addresses the activities of both the nervous system and the endocrine system, i.e., the collection of glands that secrete hormones directly into the circulatory system to reach distant target organs. Concentrations of hormones, patterns of hormone release, and the numbers and locations of hormone receptors are significantly related to human behavior at the cognitive, emotional, and relational levels. Moreover, the efficiency of hormone receptors is involved in gene transcription and vice versa. In particular, hormones influence human behavior, which in turn can influence hormones, and the cycle continues. Thus, the endocrine system must also be investigated as an important measurement in psychology. For example, several studies on psychological stress have demonstrated that prolonged stress causes the release of glucocorticoids (Lazzarino et al., 2013; Cattaneo and Riva, 2016). This release is controlled by the hypothalamus (as is the sympathetic nervous system, which is activated by acute, time-limited stressors), but in this case the control is endocrine. In fact, the hypothalamus, through a releasing factor [corticotropin-releasing hormone (CRH)], induces the pituitary gland to release adrenocorticotropic hormone (ACTH), also known as corticotropin, which targets the adrenal glands (also known as suprarenal glands) (Popoli et al., 2012; Fries et al., 2016). This process, referred to as the hypothalamic–pituitary–adrenal axis (HPA axis or HTPA axis), is a major neuroendocrine system that manages the stress reaction by regulating several body functions (among them digestion, emotions, and sexuality) (Dickerson and Kemeny, 2004). The most important glucocorticoid is cortisol, which is indeed considered to provide an objective measure of chronic stress. The metabolic effect of cortisol is slower than that of adrenaline, but it lasts longer (Singh et al., 1999).

The release of cortisol has several effects on an organism, such as increasing serum glucose through gluconeogenesis, increasing fat metabolism, and suppressing the immune system (to save energy). In addition, it has negative effects on some cognitive processes, such as memory and attention (McEwen and Sapolsky, 1995), by affecting the hippocampus, which mediates the cortisol-induced feedback inhibition of the HPA axis. Cortisol can also cause the death of neural cells in the frontal lobe, as well as detrimental consequences for the cardiovascular system (Ockenfels et al., 1995; Miller et al., 2007).
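When cortisol is sampled repeatedly, one common way to turn the samples into a single quantitative score is the area under the concentration-time curve. The sketch below applies the trapezoidal rule to invented measurements at assumed 15-minute intervals, computing the total area measured from zero and the area above the first (baseline) sample. The sampling times, units, values, and function names are all hypothetical.

```python
def auc_ground(times_min, levels):
    """Trapezoidal area under the cortisol curve, measured from zero ("with respect to ground")."""
    if len(times_min) != len(levels) or len(levels) < 2:
        raise ValueError("need matching time points and at least two samples")
    area = 0.0
    for i in range(len(levels) - 1):
        interval = times_min[i + 1] - times_min[i]
        area += (levels[i] + levels[i + 1]) / 2 * interval  # area of one trapezoid
    return area

def auc_increase(times_min, levels):
    """Area above the first (baseline) sample: total area minus the baseline rectangle."""
    return auc_ground(times_min, levels) - levels[0] * (times_min[-1] - times_min[0])

# Hypothetical samples (nmol/L) taken 0, 15, 30, and 45 minutes after a stressor.
times = [0, 15, 30, 45]
cortisol = [10, 16, 14, 11]
print(auc_ground(times, cortisol))    # 607.5
print(auc_increase(times, cortisol))  # 157.5
```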

Analysis of exhibited behavior patterns

Gomez-Marin and colleagues (Gomez-Marin et al., 2014; Gomez-Marin and Mainen, 2016) defined animal behavior as “the macroscopic expression of neural activity, implemented by muscular and glandular contractions acting on the body, and resulting in egocentric and allocentric changes in an organized temporal sequence” (p. 1456). Behavior is relational, dynamic, and multi-dimensional, and to measure it, in any analysis, we must consider all of these aspects.

Normally, psychological researchers are interested in self-reported behaviors during experiments, but this raises an important question: Do people behave consistently with what they self-report? This problem is probably more significant than measurement itself, since it can affect the validity of all of our research. Technologies can be great instruments in psychological investigations by reducing the gap between individuals' behaviors and their opinions of their behaviors. For example, a test subject could make every effort not to be stressed, but the subject could actually be more stressed than he/she thinks and/or more stressed than the general population (i.e., a normative sample). If psychophysiology can be used to understand internal states, behavioral patterns can be used to understand exhibited behaviors (Cipresso, 2015; Krakauer et al., 2017). Exhibited behaviors can also differ from what we expect. For example, in Nisbett and Wilson's famous 1977 experiment (Nisbett and Wilson, 1977), a group of participants who heard a continuous unsettling noise while watching a movie stated that the noise had reduced their enjoyment of the experience. However, Nisbett and Wilson showed that the pleasantness ratings were the same for the group with the noise and the group without it. Such discrepancies are surprisingly common in psychological experiments and need to be considered when self-reported measures are quantified. The lesson learned is that exhibited behaviors are not limited to the behaviors people express, and this needs to be taken into account in behavioral research.

In particular, activity-related behavior points to a form of action regulation that is continuous and observable and can be identified in expressions, contours, and other qualities, including vocal tonality. Moreover, gesture and posture are important cues in human communication and part of the non-verbal behavior that represents internal states, even when these remain “unsaid.” Verbal behavior, on the other hand, can be interpreted as indicating the “said” elements (Nisbett and Wilson, 1977; Giakoumis et al., 2012; Gomez-Marin et al., 2014).

Technologies to detect exhibited behavior are now also available in low-cost devices, such as Microsoft Kinect, which was built for gaming but has been used extensively in behavioral research. High-end technologies are also used to analyze behavior, for example, in path analysis for neurological patients and in motion-capture systems. Other technologies that have been used extensively in behavioral research track body movements (such as Kinect or Nintendo Wii) and eye movements (using commercial and high-end eye-trackers) (Cipresso et al., 2013). More recently, accelerometers and gyroscopes have been used both as laboratory devices and in the mobile devices we use every day, such as smartphones. Smartphones have become an important tool for researchers who want to understand behavior during daily activities and in the field, outside the laboratory (Cipresso et al., 2012a; Gaggioli et al., 2013). The range of sensors in current smartphones makes it possible to record an individual's position (via GPS), phone calls made and received, physical activity, and many other exhibited behaviors. Smartphones also make it convenient for people to self-report their activities in specific daily contexts.

Other classic technologies used to capture people's exhibited behaviors are audio and video devices, which are used in both qualitative studies and quantitative analyses. Some of these devices are very sophisticated, offering speech and video analysis for information retrieval based on artificial intelligence (Camastra and Vinciarelli, 2008).

Virtual reality

One of the best ways to experience situations in a controlled environment, such as a laboratory, is virtual reality (VR). With VR, researchers can understand and measure several cognitive and emotional states and traits, and even personality traits (Heim, 1993; Ryan, 2001; Sherman and Craig, 2002; Cipresso and Serino, 2014; Villani et al., 2016; Kane and Parsons, 2017). From a technological perspective, VR requires a standard commercial PC capable of 3D visualization, a head-mounted display (HMD) equipped with position trackers, and a game controller such as a joypad (Riva et al., 2015). The image changes in real time as the tracker records the position and orientation of the HMD on the user's head and passes this information to the computer.

Psychologists usually describe VR as “an advanced form of human-computer interface that allows the user to interact with and become immersed in a computer-generated environment in a naturalistic fashion” (Schultheis and Rizzo, 2001). In general, the sense of presence, i.e., the feeling of being “inside” the simulated world, is the key element of VR as a communication device (Riva et al., 2014, 2015). Just as individuals are consciously “being there” in a physical place, the feeling of presence in a technologically mediated environment provides a very similar experience: subjects are not “outside” the synthetic experience but “inside” it. In other words, VR provides an experience that is lived as if in a real place, while allowing experimental control in a lab setting with a fully manipulated environment (Riva et al., 2015).

In the past, the use of VR was limited by the high cost of hardware and software licenses. Over the last few years, the large market of head-mounted displays (HMDs), such as Oculus, HTC VIVE, OSVR, Gear, and others, has made it possible to buy a bundle of a PC and a VR system, including HMD, joypad, and other input devices, for less than 3,000 euros (Brooks, 1999; Riva et al., 2003; Riva and Waterworth, 2014). Unfortunately, software costs remain problematic, not because of licenses but because of the personnel needed to build an integrated VR application, which requires programming knowledge that psychologists are rarely inclined to acquire. To address this, Riva and colleagues have worked over recent decades to create free, open-access solutions for building virtual environments without writing any code (Riva et al., 2011; Cipresso and Riva, 2016).

In the 1990s, cybertherapy and virtual rehabilitation were considered interesting fields of research with several challenges and problems to solve (Lamson, 1994; North et al., 1996; Rothbaum et al., 1997). Since then, several controlled clinical trials have demonstrated the efficacy of cybertherapy (Riva, 2003, 2005; Holden, 2005; Malloy and Milling, 2010; McCann et al., 2014). The concurrent drop in HMD costs has opened a new era of cybertherapy and virtual rehabilitation, bringing assessment and quantitative measurement into the clinical field as well.

Because VR is a computer-based program, it can track everything that happens in the virtual environment. This is a great benefit for quantitative psychology and measurement, since the researcher obtains precise, millisecond-level data for each event that occurs during the experience. Thus, VR can elicit behavior in a replicable setting while simultaneously recording data and computing indexes under controlled experimental conditions. VR allows researchers to build complex settings that they can manipulate and replicate to test realistic situations, capturing behavior, which is by definition relational, dynamic, and multidimensional (Cipresso, 2015). VR can also be connected to external devices, such as biosensors integrated within the virtual environment, so that the experience during navigation can be measured quantitatively through these interconnected biosensors and through internal logs that record each event (Cipresso, 2015).

By fusing data from biosensors and devices interconnected within the VR environment, it is possible to synchronize all of these signals with the log of VR events that the researcher has set up to identify experimental conditions, as well as with unexpected occurrences, incidental findings, and any other behaviors one may wish to analyze. In this sense, VR can be considered an excellent way to collect quantitative data on people's actual behaviors during realistic situations in simulated environments.
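The synchronization step described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes that biosensor samples and the VR event log share one millisecond clock, that samples are sorted by time, and that each logged event has a unique label. The `VrEvent` class and the `mean_signal_per_event` function are hypothetical names introduced here; the function averages a signal (for instance, skin conductance) inside each logged event window.

```python
from bisect import bisect_left, bisect_right
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Optional, Sequence


@dataclass
class VrEvent:
    """One entry of a hypothetical VR event log (times in milliseconds)."""
    label: str
    start_ms: int
    end_ms: int


def mean_signal_per_event(
    times_ms: Sequence[int],
    values: Sequence[float],
    events: List[VrEvent],
) -> Dict[str, Optional[float]]:
    """Average a biosensor signal within each logged VR event window.

    Assumes times_ms is sorted ascending and uses the same clock as the
    event log. Returns {label: mean value}, or None for a window that
    contains no samples. Event labels are assumed to be unique.
    """
    summary: Dict[str, Optional[float]] = {}
    for event in events:
        first = bisect_left(times_ms, event.start_ms)  # first sample >= start
        stop = bisect_right(times_ms, event.end_ms)    # one past last sample <= end
        window = values[first:stop]
        summary[event.label] = mean(window) if window else None
    return summary


if __name__ == "__main__":
    # Hypothetical skin-conductance samples (microsiemens) every 250 ms.
    times = [0, 250, 500, 750, 1000, 1250, 1500]
    scl = [2.0, 2.1, 2.0, 3.5, 3.7, 3.6, 2.2]
    log = [VrEvent("baseline", 0, 500), VrEvent("stressor", 750, 1250)]
    print(mean_signal_per_event(times, scl, log))
```

Binary search keeps the alignment cheap even for long recordings; a real pipeline would also need to correct clock offsets between devices, which this sketch assumes away.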

Computational science, complex networks, and simulations

One of the most pervasive scientific paradigms of the twenty-first century has been complexity. From the hard sciences to the social sciences, this paradigm has shaped how we think about many phenomena. The idea that very simple interacting elements can produce a complex structure is not only fascinating but also useful, since it allows us to explain complex phenomena as emerging from the interactions of simple elements that can be understood and analyzed (Miller and Page, 2009).

The bottom-up approach exploits complexity, opens new ways to study psychological constructs, and provides new tools to answer old questions. Indeed, psychology has historically both experienced and contributed to the spread of complexity, with outstanding contributions in artificial intelligence, complex networks, and psychophysics, and, more generally, through theoretical and pragmatic models, methods, and concepts that are still part of complexity science (Myung, 2000; Friedenberg, 2009; Guastello et al., 2009).

The computational capacity currently available offers a new approach to quantitative psychology, and to measurement more generally, that goes well beyond new ways of analyzing data. For example, network complexity is used extensively to analyze relational data, but it is also used to create new ways of thinking in psychology, such as the network theory of mental disorders (Fried and Cramer, 2016; Jones et al., 2017). In addition, computational technologies are providing new platforms for the psychological assessment of patients in clinical settings, as well as for their rehabilitation. Mobile applications, virtual reality, and psychophysiology are also becoming increasingly computationally oriented in psychological science, integrating classification, automatic recognition, and machine-learning algorithms both for measurement and as new ways to treat mental disorders (Villani et al., 2011, 2016; Michalski et al., 2013; Serino et al., 2013; Cipresso et al., 2017).
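As a rough illustration of how relational data become a network in the network approach to mental disorders, the sketch below estimates a partial-correlation network from symptom scores with NumPy. It is a simplified example under stated assumptions, not the method of the cited studies: edge weights are partial correlations computed from the inverse covariance (precision) matrix with no regularization (published symptom-network analyses often use regularized estimators such as the graphical lasso), and the function name, symptom names, and simulated data are hypothetical.

```python
import numpy as np


def partial_correlation_network(data: np.ndarray) -> np.ndarray:
    """Unregularized partial-correlation network.

    data: array of shape (n_observations, n_variables), e.g. symptom ratings.
    Returns a symmetric (n_variables, n_variables) matrix whose off-diagonal
    entry [i, j] is the correlation between variables i and j after
    controlling for all other variables; the diagonal is set to zero.
    """
    covariance = np.cov(data, rowvar=False)
    precision = np.linalg.inv(covariance)         # inverse covariance matrix
    scale = np.sqrt(np.diag(precision))
    pcor = -precision / np.outer(scale, scale)    # -P_ij / sqrt(P_ii * P_jj)
    np.fill_diagonal(pcor, 0.0)                   # no self-loops
    return pcor


if __name__ == "__main__":
    # Simulated (hypothetical) chain: insomnia -> fatigue -> concentration problems.
    rng = np.random.default_rng(0)
    n = 20_000
    insomnia = rng.standard_normal(n)
    fatigue = insomnia + rng.standard_normal(n)
    concentration = fatigue + rng.standard_normal(n)
    symptoms = np.column_stack([insomnia, fatigue, concentration])

    names = ["insomnia", "fatigue", "concentration"]
    network = partial_correlation_network(symptoms)
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            print(f"{names[i]} -- {names[j]}: {network[i, j]:+.2f}")
```

In this simulated chain, insomnia and concentration problems are correlated, but their partial correlation should be close to zero because fatigue carries the link between them; the network shows two edges rather than three.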

From this perspective, pervasiveness and unobtrusiveness are the keys to integrating computational technologies and methods into psychological tools.

Toward the challenges of the twenty-second century

Considering the current development of psychometric tools for quantitative psychology and measurement, we posit that the first two decades of the twenty-first century have highlighted a future in which human-computer confluence is possible at both the methodological and the practical level (Figure 1). It seems clear that neuropsychological assessment and psychological evaluation will be based on technologies and computational methods, but we can expect more than this in the future. Low-cost and high-end technologies are spreading rapidly, and we can expect that, in the next few decades, they will be further integrated into our daily lives and into everyday objects (e.g., the Internet of Things, IoT). They will be so unobtrusive as to be invisible to users: for example, contactless biosensors that record physiological signals without any patches or sensors on the body, acting from a distance or through conductive objects, such as a chair that records the patient's ECG from his or her back or a mouse that measures the skin's conductance level (Cipresso et al., 2013; Cipresso, 2015).

Figure 1. Data fusion and computation from the available sources.

Furthermore, we can expect many other technologies and methods to be used. For example, we cannot rule out a “personalized psychology,” analogous to the well-known “personalized medicine,” that uses genetic information to understand functional and dysfunctional behavior.

In any case, the adoption of new technologies and methods can only be driven by new psychologists, in particular new psychometricians who draw on current knowledge of psychological science but who can also build new ways of thinking about psychological settings, experiments, studies, and, above all, interventions. These capabilities will provide a deeper understanding of human behavior and lead to improvements in the well-being of humankind.

Author contributions

PC and JI conceived the idea. PC wrote the manuscript. PC and JI revised the manuscript and approved the final version.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

- Blascovich J. (2014). Using physiological indexes in social psychological research, in Handbook of Research Methods in Social and Personality Psychology, eds Reis H. T., Judd C. M. (Cambridge, UK: Cambridge University Press), 101–122.

- Blumenthal T. D., Cuthbert B. N., Filion D. L., Hackley S., Lipp O. V., van Boxtel A. (2005). Committee report: guidelines for human startle eyeblink electromyographic studies. Psychophysiology 42, 1–15. 10.1111/j.1469-8986.2005.00271.x

- Brooks F. P., Jr. (1999). What's real about virtual reality? Comput. Graphics Appl. IEEE 19, 16–27. 10.1109/38.799723

- Camastra F., Vinciarelli A. (eds.). (2008). Machine learning for audio, image and video analysis, in Advanced Information and Knowledge Processing (Berlin; Heidelberg: Springer), 83–89.

- Cattaneo A., Riva M. (2016). Stress-induced mechanisms in mental illness: a role for glucocorticoid signalling. J. Steroid Biochem. Mol. Biol. 160, 169–174. 10.1016/j.jsbmb.2015.07.021

- Cipresso P. (2015). Modeling behavior dynamics using computational psychometrics within virtual worlds. Front. Psychol. 6:1725. 10.3389/fpsyg.2015.01725

- Cipresso P., Gaggioli A., Serino S., Pallavicini F., Raspelli S., Grassi A., et al. (2012b). EEG alpha asymmetry in virtual environments for the assessment of stress-related disorders. Stud. Health Technol. Inform. 173, 102–104. 10.3233/978-1-61499-022-2-102

- Cipresso P., Matic A., Lopez G., Serino S. (2017). Computational paradigms for mental health. Comput. Math. Methods Med. 2017:5607631. 10.1155/2017/5607631

- Cipresso P., Riva G. (2016). Personality assessment in ecological settings by means of virtual reality, in The Wiley Handbook of Personality Assessment, ed Kumar U. (Hoboken, NJ: John Wiley & Sons), 240–248.

- Cipresso P., Serino S. (2014). Virtual Reality: Technologies, Medical Applications and Challenges. Hauppauge, NY: Nova Science Publishers.

- Cipresso P., Serino S., Gaggioli A., Albani G., Riva G. (2013). Contactless bio-behavioral technologies for virtual reality. Ann. Rev. Cyberther. Telemed. 191, 149–153. 10.3233/978-1-61499-282-0-149

- Cipresso P., Serino S., Villani D., Repetto C., Sellitti L., Albani G., et al. (2012a). Is your phone so smart to affect your state? an exploratory study based on psychophysiological measures. Neurocomputing 84, 23–30. 10.1016/j.neucom.2011.12.027

- Dickerson S. S., Kemeny M. E. (2004). Acute stressors and cortisol responses: a theoretical integration and synthesis of laboratory research. Psychol. Bull. 130, 355–391. 10.1037/0033-2909.130.3.355

- Fried E. I., Cramer A. O. (2016). Moving forward: challenges and directions for psychopathological network theory and methodology. Perspect. Psychol. Sci. 12, 999–1020. 10.1177/1745691617705892

- Friedenberg J. (2009). Dynamical Psychology: Complexity, Self-Organization and Mind. Marblehead, MA: ISCE Publishing.

- Gaggioli A., Pioggia G., Tartarisco G., Baldus G., Corda D., Cipresso P., et al. (2013). A mobile data collection platform for mental health research. Pers. Ubiquitous Comput. 17, 241–251. 10.1007/s00779-011-0465-2

- Giakoumis D., Drosou A., Cipresso P., Tzovaras D., Hassapis G., Gaggioli A., et al. (2012). Using activity-related behavioural features towards more effective automatic stress detection. PLoS ONE 7:e43571. 10.1371/journal.pone.0043571

- Gomez-Marin A., Mainen Z. F. (2016). Expanding perspectives on cognition in humans, animals, and machines. Curr. Opin. Neurobiol. 37, 85–91. 10.1016/j.conb.2016.01.011

- Gomez-Marin A., Paton J. J., Kampff A. R., Costa R. M., Mainen Z. F. (2014). Big behavioral data: psychology, ethology and the foundations of neuroscience. Nat. Neurosci. 17, 1455–1462. 10.1038/nn.3812

- Guastello S. J., Koopmans M., Pincus D. (2009). Chaos and Complexity in Psychology. Cambridge: Cambridge University Press.

- Heim M. (1993). The Metaphysics of Virtual Reality. Oxford, UK: Oxford University Press.

- Holden M. K. (2005). Virtual environments for motor rehabilitation: review. Cyberpsychol. Behav. 8, 187–211. 10.1089/cpb.2005.8.187

- Jones P. J., Heeren A., McNally R. J. (2017). Commentary: a network theory of mental disorders. Front. Psychol. 8:1305. 10.3389/fpsyg.2017.01305

- Kane R. L., Parsons T. D. (2017). The Role of Technology in Clinical Neuropsychology. Oxford, UK: Oxford University Press.

- Krakauer J. W., Ghazanfar A. A., Gomez-Marin A., MacIver M. A., Poeppel D. (2017). Neuroscience needs behavior: correcting a reductionist bias. Neuron 93, 480–490. 10.1016/j.neuron.2016.12.041

- Kreibig S. D., Samson A. C., Gross J. J. (2015). The psychophysiology of mixed emotional states: internal and external replicability analysis of a direct replication study. Psychophysiology 52, 873–886. 10.1111/psyp.12425

- Lamson R. (1994). Virtual therapy of anxiety disorders. CyberEdge J. 4, 1–28.

- Lang P. J. (1995). The emotion probe: studies of motivation and attention. Am. Psychol. 50, 372–385. 10.1037/0003-066X.50.5.372

- Lazzarino A. I., Hamer M., Gaze D., Collinson P., Steptoe A. (2013). The association between cortisol response to mental stress and high-sensitivity cardiac troponin T plasma concentration in healthy adults. J. Am. Coll. Cardiol. 62, 1694–1701. 10.1016/j.jacc.2013.05.070

- Lewandowski A., Baker W. J., Sewick B., Knippa J., Axelrod B., McCaffrey R. J. (2016). Policy statement of the American board of professional neuropsychology regarding third party observation and the recording of psychological test administration in neuropsychological evaluations. Appl. Neuropsychol. Adult. 23, 391–398. 10.1080/23279095.2016.1176366

- Malik M. (1996). Task force of the European society of cardiology and the north American society of pacing and electrophysiology. Heart rate variability: standards of measurement, physiological interpretation, and clinical use. Eur. Heart. J. 17, 354–381. 10.1093/oxfordjournals.eurheartj.a014868

- Malloy K. M., Milling L. S. (2010). The effectiveness of virtual reality distraction for pain reduction: a systematic review. Clin. Psychol. Rev. 30, 1011–1018. 10.1016/j.cpr.2010.07.001

- Mauri M., Magagnin V., Cipresso P., Mainardi L., Brown E. N., Cerutti S., et al. (2010). Psychophysiological signals associated with affective states, Paper presented at the Engineering in Medicine and Biology Society (EMBC), 2010 Annual International Conference of the IEEE (Buenos Aires).

- McCann R. A., Armstrong C. M., Skopp N. A., Edwards-Stewart A., Smolenski D. J., June J. D., et al. (2014). Virtual reality exposure therapy for the treatment of anxiety disorders: an evaluation of research quality. J. Anxiety Disord. 28, 625–631. 10.1016/j.janxdis.2014.05.010

- McEwen B. S., Sapolsky R. M. (1995). Stress and cognitive function. Curr. Opin. Neurobiol. 5, 205–216. 10.1016/0959-4388(95)80028-X

- McGaugh J. L. (2016). Emotions and Bodily Responses: A Psychophysiological Approach. Cambridge, MA: Academic Press.

- Michalski R. S., Carbonell J. G., Mitchell T. M. (2013). Machine Learning: An Artificial Intelligence Approach. Berlin; Heidelberg: Springer Science & Business Media.

- Miller G. E., Chen E., Zhou E. S. (2007). If it goes up, must it come down? chronic stress and the hypothalamic-pituitary-adrenocortical axis in humans. Psychol. Bull. 133, 25–45. 10.1037/0033-2909.133.1.25

- Miller J. H., Page S. E. (2009). Complex Adaptive Systems: An Introduction to Computational Models of Social Life. Princeton, NJ: Princeton University Press.

- Myung I. J. (2000). The importance of complexity in model selection. J. Math. Psychol. 44, 190–204. 10.1006/jmps.1999.1283

- Nisbett R. E., Wilson T. D. (1977). Telling more than we can know: verbal reports on mental processes. Psychol. Rev. 84:231. 10.1037/0033-295X.84.3.231

- North M. M., North S., Coble J. (1996). Virtual Reality Therapy. Ann Arbor, MI: IPI Press.

- Ockenfels M. C., Porter L., Smyth J., Kirschbaum C., Hellhammer D. H., Stone A. A. (1995). Effect of chronic stress associated with unemployment on salivary cortisol: overall cortisol levels, diurnal rhythm, and acute stress reactivity. Psychosom. Med. 57, 460–467. 10.1097/00006842-199509000-00008

- Popoli M., Yan Z., McEwen B. S., Sanacora G. (2012). The stressed synapse: the impact of stress and glucocorticoids on glutamate transmission. Nat. Rev. Neurosci. 13, 22–37. 10.1038/nrn3138

- Fries G. R., Gassen N. C., Schmidt U., Rein T. (2016). The FKBP51-glucocorticoid receptor balance in stress-related mental disorders. Curr. Mol. Pharmacol. 9, 126–140. 10.2174/1874467208666150519114435

- Riva G. (2003). Applications of virtual environments in medicine. Methods Inf. Med. 42, 524–534.

- Riva G. (2005). Virtual reality in psychotherapy: review. Cyberpsychol. Behav. 8, 220–230. 10.1089/cpb.2005.8.220

- Riva G., Davide F., IJsselsteijn W. A. (2003). Being There: Concepts, Effects and Measurements of User Presence in Synthetic Environments. Amsterdam: IOS Press.

- Riva G., Gaggioli A., Grassi A., Raspelli S., Cipresso P., Pallavicini F., et al. (2011). NeuroVR 2–a free virtual reality platform for the assessment and treatment in behavioral health care. Stud. Health Technol. Inform. 163, 493–495. 10.3233/978-1-60750-706-2-493

- Riva G., Mantovani F., Waterworth E. L., Waterworth J. A. (2015). Intention, Action, Self and Other: An Evolutionary Model of Presence Immersed in Media. Berlin; Heidelberg: Springer.

- Riva G., Waterworth J. (2014). Being present in a virtual world, in Oxford Handb. Virtual, ed Grimshaw M. (Oxford: Oxford University Press), 205.

- Riva G., Waterworth J., Murray D. (2014). Interacting with Presence: HCI and the Sense of Presence in Computer-Mediated Environments. Berlin: Walter de Gruyter GmbH.

- Rothbaum B. O., Hodges L., Kooper R. (1997). Virtual reality exposure therapy. J. Psychother. Pract. Res. 6, 219–226.

- Russell J. A. (1979). Affective space is bipolar. J. Pers. Soc. Psychol. 37, 345–356. 10.1037/0022-3514.37.3.345

- Ryan M.-L. (2001). Narrative as Virtual Reality. London: Parallax.

- Schultheis M. T., Rizzo A. A. (2001). The application of virtual reality technology in rehabilitation. Rehabil. Psychol. 46:296. 10.1037/0090-5550.46.3.296

- Serino S., Cipresso P., Gaggioli A., Riva G. (2013). The potential of pervasive sensors and computing for positive technology: the interreality paradigm, in Pervasive and Mobile Sensing and Computing for Healthcare. Berlin; Heidelberg: Springer.

- Sherman W. R., Craig A. B. (2002). Understanding Virtual Reality: Interface, Application, and Design. Amsterdam: Elsevier.

- Singh A., Petrides J. S., Gold P. W., Chrousos G. P., Deuster P. A. (1999). Differential hypothalamic-pituitary-adrenal axis reactivity to psychological and physical stress. J. Clin. Endocrinol. Metab. 84, 1944–1948. 10.1210/jc.84.6.1944

- Thompson T. P. (2016). EEG in Depth: The Intersection of Electroencephalography and Depth Psychology. Carpinteria, CA: Pacifica Graduate Institute.

- Villani D., Cipresso P., Gaggioli A., Riva G. (Eds). (2016). Integrating Technology in Positive Psychology Practice. Hershey, PA: IGI Global.

- Villani D., Grassi A., Cognetta C., Cipresso P., Toniolo D., Riva G. (2011). The effects of a mobile stress management protocol on nurses working with cancer patients: a preliminary controlled study. Stud. Health Technol. Inform. 173, 524–528. 10.3233/978-1-61499-022-2-524


HYPOTHESIS AND THEORY article

Quantitative methods in psychology: inevitable and useless.

- Institute of Psychology, Tallinn University, Tallinn, Estonia

Science begins with the question, what do I want to know? Science becomes science, however, only when this question is justified and the appropriate methodology is chosen for answering it. The research question should precede the other questions; methods should be chosen according to the research question, not vice versa. Modern quantitative psychology has accepted method as primary; research questions are adjusted to the methods. To understand thinking in modern quantitative psychology, two epistemologies should be distinguished: structural-systemic, which is based on Aristotelian thinking, and associative-quantitative, which is based on Cartesian–Humean thinking. The first aims at understanding the structure that underlies the studied processes; the second seeks to identify cause–effect relationships between events, with no possible access to the structures that underlie the processes. Quantitative methodology in particular, as well as mathematical psychology in general, is useless for answering questions about the structures and processes that underlie observed behaviors. Nevertheless, quantitative science is almost inevitable in a situation where the systemic-structural basis of behavior is not well understood; all sorts of applied decisions can be made on the basis of quantitative studies. In order to proceed, psychology should study structures; methodologically, constructive experiments should be added to observations and analytic experiments.

Science begins with questions. Everybody can have questions, and even answers to them. What makes science special is its method of answering questions. Therefore, a scientist must ask questions both about the phenomenon to be understood and about the method. There are actually not one or two but four principal questions that every scientist should ask when conducting studies (Toomela, 2010b):

1. What do I want to know, what is my research question?

2. Why do I want to have an answer to this question?

3. With what specific research procedures (methodology in the strict sense of the term) can I answer my question?

4. Are the answers to the first three questions complementary; do they make a coherent, theoretically justified whole?

First, there should be a question about some phenomenon that needs an answer. Next, the need for an answer should be justified: in science it is quite possible to ask “wrong” questions, whose answers do not help us understand the phenomena studied. In his colorful language, Vygotsky (1982a) gave an ironic example of answering scientifically wrong questions:

One can multiply the number of citizens of Paraguay with the number of versts [an obsolete Russian unit of length] from Earth to Sun and divide the result with the average length of life of an elephant and conduct this whole operation without a flaw even in one number; and yet the number found in the operation can confuse anybody who would like to know the national income of that country

(p. 326; my translation).

It might be said that modern psychology is more advanced than the science of Vygotsky’s time, and that perhaps the questions asked in modern science are meaningful. This opinion, however, may be wrong. One source of wrong questions about the phenomena studied is an unsatisfactory answer to the last question, that is, when the answers to the first three questions do not agree with one another. In this paper I am going to suggest that psychology asks “wrong” questions far too often. The problem is related to a mismatch between the answers to the first and third questions. Specifically, the quantitative methodology that dominates today’s psychology is not appropriate for understanding mental phenomena, the psyche.

The number of substantial problems with quantitative methods raised by scholars is increasing every year. A single observation should already make scientists cautious. The questions above are listed in a certain order: first we should have a question about the phenomenon, and only then should we look for the appropriate method for finding an answer. A substantial part of modern psychology follows the opposite order of decisions: first it is decided to use quantitative methods, and the question about the phenomenon is already formulated in the language of data analysis. Between 1940 and 1955, statistical data analysis became the indispensable tool for hypothesis testing; with this change in scientific methodology, statistical methods began to turn into theories of mind. Instead of researchers looking for a theory that could perhaps be elaborated with the help of statistical tools, statistical tools began to determine the shape of theories (Gigerenzer, 1991, 1993).

For instance, a researcher may ask how many factors emerge in the analysis of personality or intelligence test results. But why look for the number of factors if personality or intelligence is what is being studied? We would guess that the original question may be something like: is it possible to identify distinguishable components in the structure of personality or intelligence? However, the decision to use factor analysis for that purpose must be justified before this method is chosen. This justification seems to be missing; it is only a hypothesis, and an ungrounded one, that factor analysis is an appropriate tool for identifying distinct mental processes that underlie behavioral data (filling in a questionnaire is behavior). Problems emerge as early as determining the number of factors to retain. There are formal and substantive criteria for that. Formal decisions are based on Kaiser’s criterion, Cattell’s scree test, Velicer’s Minimum Average Partial test, or Horn’s parallel analysis. There is no evidence that any of these criteria is actually suitable for distinguishing the number of distinct processes that underlie behavior. Researchers also decide the number of factors on the basis of comprehensibility: the solution that generates the most comprehensible factor structure is chosen. But this substantive criterion is always applied after the formal criteria; nobody starts from the possibility that all, say, 248 items of an inventory correspond to 248 distinct mental processes. The number of factors usually retained, from two to six or seven, seems to correspond to the processing limitations of the researcher’s working memory rather than to the true structure of the mind.
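To show what the formal retention rules named above actually compute, the sketch below implements two of them, Kaiser’s criterion and Horn’s parallel analysis, with NumPy. It is a minimal illustration under stated assumptions, not the author's procedure: it uses eigenvalues of the item correlation matrix (a principal-components convention), compares them with the mean eigenvalues of 100 simulated uncorrelated datasets (many implementations use the 95th percentile instead), and the function names and simulated questionnaire are hypothetical. Both rules are computed from the correlation matrix alone; nothing in the calculation refers to the processes that produced the answers.

```python
import numpy as np


def sorted_eigenvalues(data: np.ndarray) -> np.ndarray:
    """Eigenvalues of the item correlation matrix, largest first."""
    corr = np.corrcoef(data, rowvar=False)
    return np.linalg.eigvalsh(corr)[::-1]  # eigvalsh returns ascending order


def kaiser_count(data: np.ndarray) -> int:
    """Kaiser's criterion: count eigenvalues greater than 1."""
    return int(np.sum(sorted_eigenvalues(data) > 1.0))


def parallel_analysis_count(data: np.ndarray, n_iter: int = 100, seed: int = 0) -> int:
    """Horn's parallel analysis (mean-eigenvalue variant).

    Retains the leading factors whose observed eigenvalue exceeds the mean
    eigenvalue, at the same rank, of n_iter uncorrelated normal datasets with
    the same number of observations and items.
    """
    n_obs, n_items = data.shape
    observed = sorted_eigenvalues(data)
    rng = np.random.default_rng(seed)
    random_eigs = np.array([
        sorted_eigenvalues(rng.standard_normal((n_obs, n_items)))
        for _ in range(n_iter)
    ])
    reference = random_eigs.mean(axis=0)
    count = 0
    for obs, ref in zip(observed, reference):
        if obs <= ref:  # stop at the first eigenvalue that random data match
            break
        count += 1
    return count


if __name__ == "__main__":
    # Hypothetical questionnaire: 500 respondents, 8 items, two simulated factors
    # (items 0-3 load on the first, items 4-7 on the second).
    rng = np.random.default_rng(1)
    factors = rng.standard_normal((500, 2))
    loadings = np.zeros((2, 8))
    loadings[0, :4] = 0.8
    loadings[1, 4:] = 0.8
    items = factors @ loadings + 0.6 * rng.standard_normal((500, 8))
    print("Kaiser:", kaiser_count(items))
    print("Parallel analysis:", parallel_analysis_count(items))
```

Because the simulated items were generated from two factors, both rules can be checked against a known answer here; with real questionnaire data no such known generating structure is available.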

In this paper, the epistemological issues that underlie the quantitative methods used in psychology are discussed. I suggest that, regardless of the research area in psychology, mathematical procedures of any kind cannot answer questions about the structure of mind. The discussion focuses primarily on statistical methodology as used in psychology today, yet there are fundamental problems inherent in other kinds of mathematical approaches as well. My intention is not to suggest that scientific studies of mind should reject mathematical approaches. Rather, it should be made clear which questions can be answered with the help of mathematical methods and which cannot.

Which Questions Can and Which Cannot Be Answered by Statistical Data Analysis Procedures?

We should look for the reasons for using statistical data analysis in the works of those who introduced quantitative methodology into the sciences in general and psychology in particular. Today, as a rule, users and developers of statistical data analysis procedures no longer ask which questions can and which cannot be answered with the help of those procedures. The scholars who introduced mathematical procedures, however, made it clear what kinds of answers they were looking for. We will see that these scholars would reject the questions that statistical procedures are used to answer today, for reasons that modern researchers largely ignore without any scientific justification. One of the most influential figures in introducing factor analysis into psychology was Thurstone. Several ideas in his foundational work The Vectors of Mind (Thurstone, 1935) deserve attention. For the most part, these ideas underlie not only factor analysis but all forms of covariation-based data analysis procedures.

What Are the (Statistical) Causes of Relationships Between Variables?

Thurstone suggested that the object of factor analysis is to discover the mental faculties. Interestingly, for him factor analysis alone would never have been sufficient to prove that a new faculty had been discovered; he held that the results of factor analysis must be supported by experimental observations. In another work he observed that in some fields of study the tests used are not tests at all: “They are only questionnaires in which the subject controls the answers completely. It would probably be very fruitful to explore the domain of temperament with experimental tests instead of questionnaires.” (Thurstone, 1948, p. 406). Thurstone would thus very likely reject the now common practice of studying many psychological phenomena (personality, values, attitudes, mental states, etc.) with questionnaires alone. There seems to be no theory that would justify studying the structure of the mind with questionnaires alone. Without a theory that links subjectively controlled patterns of answers to the objective structure of the mind, the results of all such studies are ungrounded. A thorough analysis of this issue is beyond the scope of the current paper.

We can ask: what general question did Thurstone aim to answer with the help of factor analysis? Thurstone was not asking how mental faculties operate; he was seeking to identify what he called abilities, i.e., traits (attributes of individuals) defined by what an individual can do (Thurstone, 1935, p. 48).

The same question, the identification of “abilities,” underlies not only factor analysis but other covariation-based statistical data-analysis procedures as well. Here it is worth going deeper into the roots of the introduction of quantitative data analysis into the sciences. Methods for calculating correlation coefficients entered the sciences around the middle of the 19th century but became popular with the works of Pearson (cf. Pearson, 1896). He formulated the tasks of statistical data analysis as follows:

One of the most frequent tasks of the statistician, the physicist, and the engineer, is to represent a series of observations or measurements by a concise and suitable formula. Such a formula may either express a physical hypothesis, or on the other hand be merely empirical, i.e., it may enable us to represent by a few well selected constants a wide range of experimental or observational data. In the latter case it serves not only for purposes of interpolation, but frequently suggests new physical concepts or statistical constants.

(Pearson, 1902, p. 266).

I think it is especially noteworthy that the formula being sought represents observations or measurements, i.e., variables. This fact is so obvious that the consequences that follow from it are usually not thought through. The main problem with the use of observations and measurements is that they do not necessarily reflect reality objectively; they are the researcher’s subjective interpretations of the world. This is especially true when the observation (in psychology, of external behavior) is supposed to reflect the operation of a construct hidden from direct observation, a mental faculty. Thurstone acknowledged that externally similar behaviors can be based on internally different mechanisms; he was interested only in finding formulas that express regularities in external behavior. Pearson essentially did the same; he assumed that with the help of correlations it is possible to get closer to identifying the different causes of external regularities. Nor did Pearson aim to describe how these causes operate. Like Thurstone, he sought to identify regularities (faculties, in Thurstone’s terms) in observable cause → effect chains without claiming that a unique cause hidden from direct observation had necessarily been identified. This limitation on the aim of statistical analyses can be found in many of his works, for instance in the following passage:

We shall now assume that the sizes of this complex of organs are determined by a great variety of independent contributory causes, for example, magnitudes of other organs not in the complex, variations in environment, climate, nourishment, physical training, various ancestral influences, and innumerable other causes, which cannot be individually observed or their effects measured.

(Pearson, 1896, p. 262).

When Pearson correlated the sizes of organs, he was thus aware that mathematical formulas reflecting commonalities in the variation of two variables do not reflect the unique roles of individual contributory causes; these causes determine the measured sizes in unknown ways.

The general form of the question Pearson answered with statistical analyses can be formulated as follows: What is the value of a certain variable when we know the value of another variable that is correlated with the first? Pearson provided an example of this kind of use when he reconstructed “the parts of an extinct race from a knowledge of a size of a few organs or bones, when complete measurements have been or can be made for an allied and still extant race.” (Pearson, 1899, p. 170).
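Pearson’s question can be illustrated with a minimal Python sketch, assuming the modern least-squares prediction formula y_hat = mean_y + r × (sd_y / sd_x) × (x − mean_x). The reference sample below is invented and merely stands in for the “allied and still extant race”; the numbers are not Pearson’s data, and the function name is hypothetical.

```python
import statistics


def predict_from_correlate(xs, ys, x_new):
    """Predict y for a new x from a reference sample of paired (x, y) values,
    using y_hat = mean_y + r * (sd_y / sd_x) * (x_new - mean_x)."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    sd_x, sd_y = statistics.pstdev(xs), statistics.pstdev(ys)
    r = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / (len(xs) * sd_x * sd_y)
    return mean_y + r * (sd_y / sd_x) * (x_new - mean_x), r


# Invented reference sample (hypothetical): a bone length and stature, both in cm.
bone = [42.0, 44.0, 45.0, 47.0, 48.0, 50.0]
stature = [158.0, 163.0, 164.0, 171.0, 172.0, 178.0]

estimate, r = predict_from_correlate(bone, stature, 49.0)
print(f"r = {r:.3f}; estimated stature for a 49 cm bone: {estimate:.1f} cm")
```

The prediction draws only on the covariation observed in the reference sample; nothing in the formula says why bone length and stature covary.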

Correlation, in this case, can be understood as a representation of some abstract cause that “makes” variables covary. Thus, the same question can be reformulated: Is it possible to discover an abstract, cause-like communality of different variables that is expressed as covariation? I think Pearson clearly understood that correlation reflects covariations of appearances; the true underlying causes of covariation, the mechanisms that determine how the covariation emerges, cannot be known with statistical procedures, because many independent causal agents are operating, “which cannot be individually observed or their effects measured.” At the same time, he could interpret covariations between variables in non-mathematical terms; he interpreted them as reflecting a common cause. For instance, he concluded on the basis of statistical analyses that fertility and fecundity are inherited characteristics (Pearson et al., 1899); he thus suggested that a non-mathematical factor, inheritance, underlies the correlations he discovered.
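Pearson’s point that a correlation reflects covariation of appearances rather than the causal agents behind it can be illustrated with a small simulation. The sketch below assumes two invented mechanisms: in one, a single hidden cause drives both variables; in the other, there are 60 small independent causes and no single common one, and x and y share 20 of their 40 causes each. Both are constructed to have a population correlation of 0.5, so their sample correlations are practically indistinguishable even though the mechanisms differ. The functions and their parameters are hypothetical.

```python
import math
import random


def pearson_r(xs, ys):
    """Sample Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def one_common_cause(n, rng):
    """Hypothetical mechanism A: a single hidden cause c drives both variables."""
    xs, ys = [], []
    for _ in range(n):
        c = rng.gauss(0, 1)
        xs.append(c + rng.gauss(0, 1))  # population Var(x) = 2, Cov(x, y) = 1
        ys.append(c + rng.gauss(0, 1))
    return xs, ys


def many_overlapping_causes(n, rng):
    """Hypothetical mechanism B: 60 small independent causes, none common to all.
    x sums causes 0-39 and y sums causes 20-59, so they share 20 of their 40 causes."""
    xs, ys = [], []
    for _ in range(n):
        causes = [rng.gauss(0, 1) for _ in range(60)]
        xs.append(sum(causes[:40]))  # population Var(x) = 40, Cov(x, y) = 20
        ys.append(sum(causes[20:]))
    return xs, ys


rng = random.Random(42)
r_a = pearson_r(*one_common_cause(5000, rng))
r_b = pearson_r(*many_overlapping_causes(5000, rng))
print(f"one common cause:      r = {r_a:.3f}")
print(f"60 overlapping causes: r = {r_b:.3f}")
```

Given only the two correlation coefficients, there is no way to tell which mechanism produced the data.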

Thurstone went a step further and suggested that it is possible to find formulas expressing patterns of covariation: factor analysis identifies “faculties” or “abilities” that underlie the correlations among several variables simultaneously. He was also clear that factor analysis expresses relationships between appearances; possible differences in the internal mechanisms that may underlie externally similar behaviors are not reflected in the results of factor analysis.
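What it means for a factor to “underlie” correlations can be made concrete with the simplest case, a standardized one-factor model. This is a textbook simplification assumed here for illustration; Thurstone’s multiple-factor analysis generalizes it to several factors. Each observed variable $x_i$ is written as a loading $\lambda_i$ times a common factor $F$ plus a unique part $\varepsilon_i$:

```latex
\begin{aligned}
x_i &= \lambda_i F + \varepsilon_i,
  \qquad \operatorname{Var}(F) = 1,\quad
  \operatorname{Var}(\varepsilon_i) = 1 - \lambda_i^2,\quad
  \operatorname{Cov}(F, \varepsilon_i) = 0,\quad
  \operatorname{Cov}(\varepsilon_i, \varepsilon_j) = 0 \ (i \neq j) \\
\operatorname{Var}(x_i) &= \lambda_i^2 \operatorname{Var}(F) + \operatorname{Var}(\varepsilon_i) = \lambda_i^2 + (1 - \lambda_i^2) = 1 \\
\operatorname{Corr}(x_i, x_j) &= \operatorname{Cov}(\lambda_i F + \varepsilon_i,\ \lambda_j F + \varepsilon_j) = \lambda_i \lambda_j \operatorname{Var}(F) = \lambda_i \lambda_j \qquad (i \neq j)
\end{aligned}
```

For example, loadings $\lambda = (0.8, 0.7, 0.6)$ imply $\rho_{12} = 0.56$, $\rho_{13} = 0.48$, and $\rho_{23} = 0.42$. The model constrains only the correlations, and $F$ is defined solely by its role in reproducing them, so any mechanism that yields the same correlation pattern fits the equations equally well.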

Limits on the Questions That Can Be Answered with the Help of Statistical Data Analysis Procedures

Statistical theories reflect regularities only in appearances.

Pearson was fully aware of the limits of statistical theories. A theory that looks for mechanisms should be clearly distinguished from pure descriptions of regularities in superficial observations; statistical laws… “have nothing whatever to do with any physiological hypothesis” (Pearson, 1904, p. 55). According to him, “the statistical view of inheritance is not at basis a theory, but a description of observed facts, with which any physiological theory must be in accord” (Pearson, 1903–1904, p. 509). We know from the modern biological theory of inheritance how right he was: the statistical laws he discovered really had nothing to do with the discovery of the structure of DNA, even though they may have directed biologists to look for a possible substrate of inheritance. It is also noteworthy that, contrary to what Pearson held, once the structure of DNA had been discovered and the biological mechanisms of inheritance explained, there was no need at all to check whether this structural theory accords with Pearson’s laws; these laws became irrelevant to the theory.

Thurstone sought to discover mental faculties with the help of factor analysis. Like Pearson, he did not assume that the discovered faculties can be directly related to mental operations; he did not assume a one-to-one correspondence between observed behaviors and the mechanisms that underlie them:

The attitudes of people on a controversial social issue have been appraised by allocating each person to a point in a linear continuum as regards his favorable or unfavorable affect toward the psychological object. Some social scientists have objected because two individuals may have the same attitude score toward, say, pacifism, and yet be totally different in their backgrounds and in the causes of their similar social views. If such critics were consistent, they would object also to the statement that two men have identical incomes, for one of them earns when the other one steals. They should also object to the statement that two men are of the same height. The comparison should be held invalid because one of the men is fat and the other is thin.

(Thurstone, 1935, p. 47)

It was shown above that Thurstone was not asking how mental faculties operate; he was seeking only to identify abilities. There can thus be an ability to make money, and this ability is treated the same regardless of whether the income is earned or stolen. The statistical procedures used by Thurstone might discover, for instance, an ability to make an income, but they would give no clue as to the mechanisms of income-making. So, if there is a phenomenon like income in the world, factor analysis could perhaps help to discover it.

There are, however, reasons to disagree partly with Thurstone’s interpretation of this procedure. He argued, for example, that if social scientists were fully consistent they would have to treat incomes from different sources as different, a conclusion he rejected. Essentially, Thurstone seems to assume that it is possible to isolate a phenomenon from the world and study it, once isolated, as a thing in itself. In the real world, however, a thing that existed completely isolated from the world would be unknowable in principle, because we know the world only by being in relation to it. Income is by definition the amount of money or its equivalent received during a period of time. If we analyze the phenomenon of money, we discover that it is a relational phenomenon. Money is a medium of exchange and a unit of account; it is thus a phenomenon that mediates certain economic relationships. Outside society, money ceases to be money; it becomes just a physical object. Societies determine relations toward money in ways far more complex than the purely economic. For instance, in modern democratic societies it is legally possible to confiscate money if it turns out to have been stolen, but there are no societies in which money is confiscated because it was earned. So the incomes of the two men, one who earns and one who steals, are indeed not the same.

Thurstone would likely, and fairly, reject this critique by replying that “Every scientific construct limits itself to specified variables without any pretense to cover those aspects of a class of phenomena about which it has said nothing” (Thurstone, 1935, p. 47). By saying that a factor represents some isolated characteristic of the studied phenomenon, Thurstone keeps his approach consistent. And this is exactly where the weakness of statistical theories lies: these theories are about regularities in appearances, with no necessary connection to the underlying mechanisms. Like Pearson, Thurstone was fully aware of this limitation: